Intuitive 3D Control of a Quadrotor in User Proximity with Pointing Gestures

This page contains supplementary information for our submission to the ICRA 2020 conference.

We consider the case in which an operator needs to control a quadrotor in their close proximity, in direct line of sight, along complex 3D trajectories. In particular, the operator controls the robot by pointing at the desired target position in 3D space; the robot moves there and keeps following the target as the operator changes where they are pointing. This lets the operator control the robot finely and continuously, and drive it along complex trajectories.

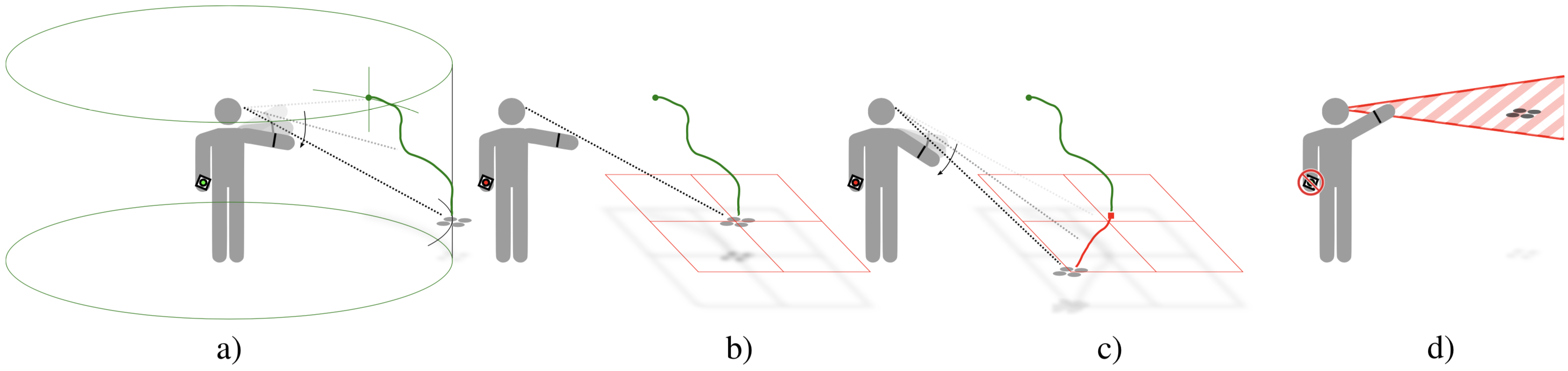

Regardless of how it is sensed, the act of pointing does not by itself uniquely identify the desired target position: a given pointing stance defines a pointing ray in 3D space, which originates at the operator's position and extends to infinity along a given 3D direction, and the target point might lie anywhere on this ray. In certain scenarios the point can be identified: if the robot's motion is constrained to a plane (e.g. a robot moving on flat ground or flying at a fixed height), or if the target point lies close to a surface of the world (e.g. a quadruped robot walking on arbitrary terrain). In these cases the target point is found as the intersection of the pointing ray with the respective surface.
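To make the plane case concrete, here is a minimal sketch of intersecting a pointing ray with a plane. It assumes the ray is given as an origin and a direction vector in a common world frame with the z axis pointing up; the function name and the tolerance `eps` are illustrative and not taken from the project's code.

```python
from typing import Optional, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def _dot(a: Sequence[float], b: Sequence[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


def intersect_ray_plane(origin: Vec3, direction: Vec3,
                        plane_point: Vec3, plane_normal: Vec3,
                        eps: float = 1e-9) -> Optional[Vec3]:
    """Return the point origin + t * direction (t > 0) lying on the plane
    through plane_point with normal plane_normal, or None if there is none."""
    denom = _dot(plane_normal, direction)
    if abs(denom) < eps:
        # Ray (nearly) parallel to the plane: no unique intersection.
        return None
    diff = tuple(p - o for p, o in zip(plane_point, origin))
    t = _dot(plane_normal, diff) / denom
    if t <= 0:
        # The plane is only hit behind the operator.
        return None
    return tuple(o + t * d for o, d in zip(origin, direction))
```

For example, a ray starting 1.5 m above flat ground (the plane z = 0) and pointing along (1, 0, -0.5) meets the ground at (3, 0, 0), while the same ray pointing slightly upward never reaches the ground and yields `None`.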

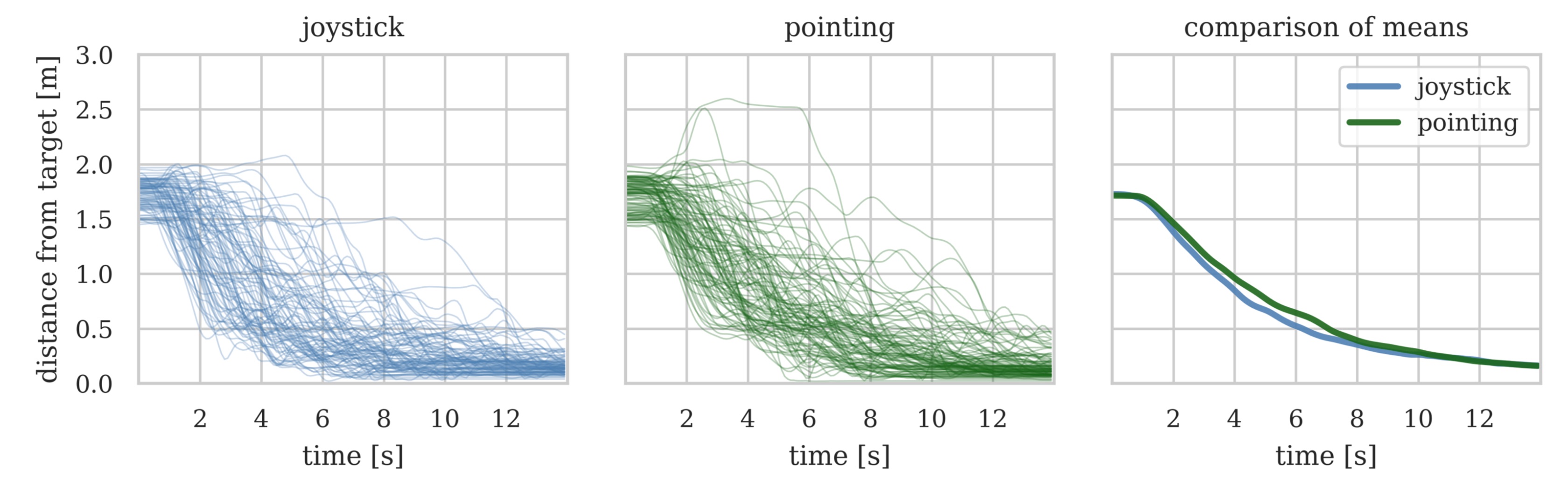

In this paper we consider a quadrotor that moves freely in 3D space, where these constraints do not apply. We propose and validate a pragmatic solution that uses a push button as a simple additional input device. Results of a study involving ten subjects show that the approach performs well on a challenging 3D piloting task, where it compares favorably with joystick control.

Implementation

To define the robot's target position in free 3D space, we intersect the pointing ray with a virtual workspace surface. As shown in our previous work, in its simplest form this surface can be a plane parallel to the ground. We extend this approach by adding a second workspace surface that also lets the user control the robot's altitude. The user switches between the two surfaces with a simple push button.

Among the possible combinations of workspace surface shapes, we chose a cylinder and a horizontal plane: the plane lets the user control the horizontal distance between themselves and the robot, while the cylinder lets them control the robot's altitude without changing that horizontal distance. Both surfaces are defined to pass through the robot's current position; in addition, the cylinder is centered on the user.
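The two workspace surfaces can be sketched as ray intersection routines. This is a minimal sketch, not the implementation in the project's ROS packages: it assumes a world frame with z pointing up, a vertical cylinder axis through the user's position, a pointing ray whose origin lies on the user (e.g. near the head or shoulder), and hypothetical function names. Both surfaces are rebuilt from the robot's current position, as described above.

```python
import math
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]


def intersect_horizontal_plane(origin: Vec3, direction: Vec3, robot: Vec3,
                               eps: float = 1e-9) -> Optional[Vec3]:
    """Plane workspace z = robot altitude: pointing moves the robot
    horizontally (changing its distance from the user) at constant altitude."""
    dz = direction[2]
    if abs(dz) < eps:
        return None  # ray parallel to the plane
    t = (robot[2] - origin[2]) / dz
    if t <= 0:
        return None  # plane only reachable behind the ray origin
    return (origin[0] + t * direction[0], origin[1] + t * direction[1], robot[2])


def intersect_cylinder(origin: Vec3, direction: Vec3, robot: Vec3, user: Vec3,
                       eps: float = 1e-9) -> Optional[Vec3]:
    """Cylinder workspace: vertical axis through the user, radius equal to the
    current horizontal user-robot distance. Pointing changes altitude and
    bearing while keeping the horizontal distance fixed."""
    radius = math.hypot(robot[0] - user[0], robot[1] - user[1])
    # Work in the horizontal projection, relative to the cylinder axis.
    ox, oy = origin[0] - user[0], origin[1] - user[1]
    dx, dy = direction[0], direction[1]
    a = dx * dx + dy * dy
    if a < eps:
        return None  # pointing straight up or down never crosses the wall
    b = 2.0 * (ox * dx + oy * dy)
    c = ox * ox + oy * oy - radius * radius
    disc = b * b - 4.0 * a * c
    if disc < 0:
        return None
    # Farther root: where the ray exits the cylinder (the only positive root
    # when the ray origin is inside the cylinder, the usual case).
    t = (-b + math.sqrt(disc)) / (2.0 * a)
    if t <= 0:
        return None
    return tuple(o + t * d for o, d in zip(origin, direction))
```

For example, with the user at the origin, the ray starting 1.5 m up and the robot at (3, 0, 1), pointing along (1, 0, 0.5) places the cylinder target at (3, 0, 3): the same 3 m horizontal distance at a higher altitude. Pointing along (1, 0, -0.5) on the plane instead brings the robot to (1, 0, 1), closer to the user at unchanged altitude.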

Figure 1 shows the main interaction stages. Note that in certain cases switching between the workspaces can be dangerous or impossible (Fig. 1d), e.g. when the pointing ray does not intersect the new workspace surface.
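One possible way to guard the push-button switch is sketched below: on each press, the candidate surface is intersected with the current pointing ray, and the switch is refused if there is no intersection or if the new target would lie far from the robot. The refusal policy, the `max_jump` threshold, and the `Surface` callable interface are assumptions for illustration, not necessarily the behavior of the actual system.

```python
import math
from typing import Callable, Dict, Optional, Tuple

Vec3 = Tuple[float, float, float]
# Hypothetical interface: (ray origin, ray direction, robot position) -> target
# on one workspace surface, or None if the ray misses that surface.
Surface = Callable[[Vec3, Vec3, Vec3], Optional[Vec3]]


class WorkspaceSwitcher:
    """Toggles between two workspace surfaces on each button press."""

    def __init__(self, surfaces: Dict[str, Surface], active: str,
                 max_jump: float = 1.0) -> None:
        if len(surfaces) != 2 or active not in surfaces:
            raise ValueError("expected exactly two surfaces, one of them active")
        self.surfaces = surfaces
        self.active = active
        self.max_jump = max_jump  # hypothetical safety threshold, in metres

    def target(self, origin: Vec3, direction: Vec3, robot: Vec3) -> Optional[Vec3]:
        """Target on the currently active surface."""
        return self.surfaces[self.active](origin, direction, robot)

    def on_button(self, origin: Vec3, direction: Vec3, robot: Vec3) -> bool:
        """Try to switch to the other surface; return True if it switched."""
        other = next(name for name in self.surfaces if name != self.active)
        new_target = self.surfaces[other](origin, direction, robot)
        if new_target is None:
            return False  # impossible: the ray misses the new surface
        if math.dist(new_target, robot) > self.max_jump:
            return False  # dangerous: the robot would jump to a far target
        self.active = other
        return True
```

A distance threshold is only a crude safety proxy: when the ray is nearly parallel to the horizontal plane, small pointing errors move the intersection point very far, which is the kind of case such a check is meant to catch.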

Fig. 2: Visualization of the trajectory segments performed with pointing. The green curves represent parts performed using the cylinder workspace, the red ones parts performed using the horizontal plane workspace. The short cylinders represent the targets and the tall thin cylinder represents the user.

Fig. 3: Visualization of the trajectory segments performed with the joystick.

Experiments

We experimentally showed the viability of this approach and found that our method compares favorably with commodity joystick controllers.

Please refer to the supplementary appendix for a discussion of the chosen virtual shapes and for additional details on the experimental setup.

Code and Datasets

The code and datasets to reproduce the results presented in this work can be found here.

Source code of the ROS packages: https://github.com/idsia-robotics/volaly.

Acknowledgment

This work was partially supported by the Swiss National Science Foundation (SNSF) through the National Centre of Competence in Research (NCCR) Robotics.

Related Publications

- B. Gromov, J. Guzzi, L. Gambardella, and A. Giusti, “Intuitive 3D Control of a Quadrotor in User Proximity with Pointing Gestures,” in 2020 IEEE International Conference on Robotics and Automation (ICRA), 2020, pp. 5964–5971.

- B. Gromov, G. Abbate, L. Gambardella, and A. Giusti, “Proximity Human-Robot Interaction Using Pointing Gestures and a Wrist-mounted IMU,” in 2019 IEEE International Conference on Robotics and Automation (ICRA), 2019, pp. 8084–8091.

- B. Gromov, L. Gambardella, and A. Giusti, “Robot Identification and Localization with Pointing Gestures,” in 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 2018, pp. 3921–3928.