Robot Identification and Localization with Pointing Gestures

This work was presented at the International Conference on Intelligent Robots and Systems (IROS), October 1-5, 2018, Madrid, Spain.

We demonstrate a novel approach to localize a mobile robot with respect to an operator in its proximity through a simple pointing gesture interaction.

The code and datasets to reproduce the results presented in the paper can be found here.

The interaction consists of two phases:

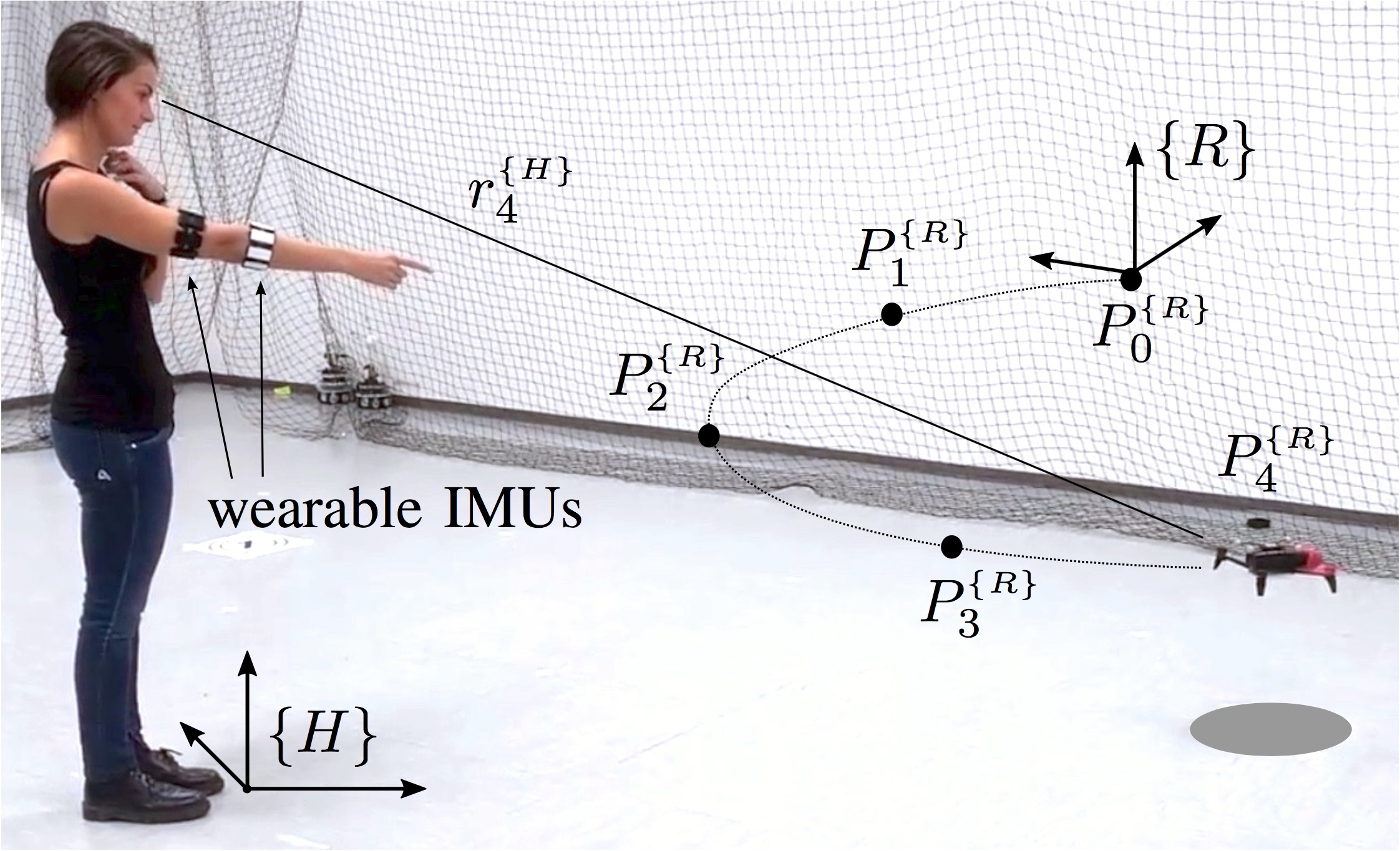

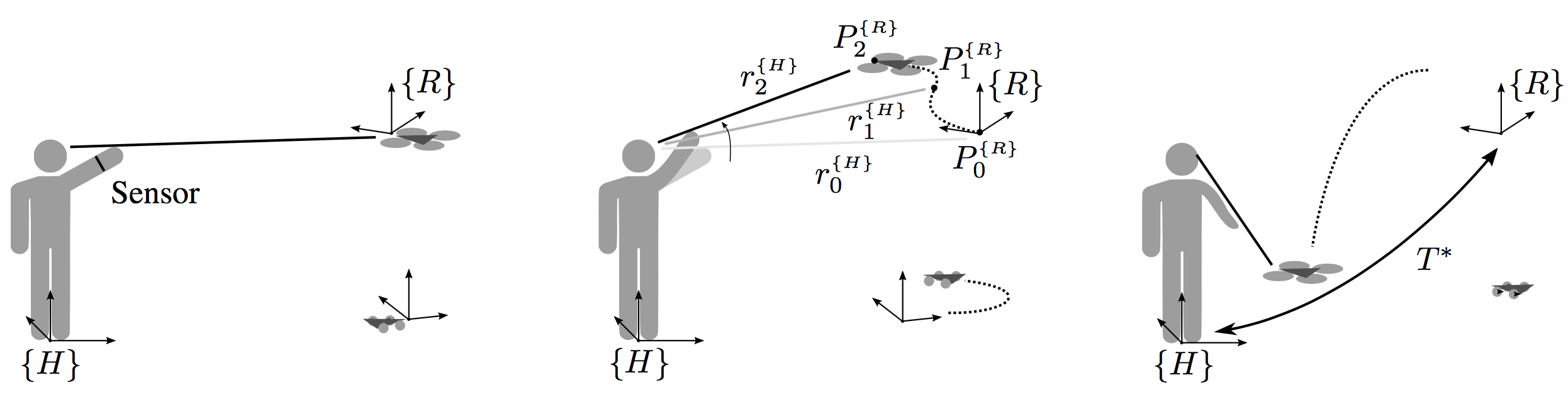

- Phase 1: The operator points at the robot they want to interact with and triggers the start of the interaction.

- Phase 2: The robot starts moving along a predefined trajectory while the operator keeps following the robot's position with a pointing gesture. The system acquires a set of pairs of pointing rays \( r_i \) in the operator's frame and robot positions \( P_i \) in the robot's frame, and establishes the coordinate transformation \( T^* \) between the two frames using a nonlinear optimization procedure.
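
The Phase 2 estimation can be sketched as a small least-squares problem. The following is a minimal illustration, not the authors' implementation. It assumes (an assumption made here, not stated in this README) that the transform has four degrees of freedom, a yaw rotation plus a 3D translation, and it treats each pointing ray as an infinite line defined by an origin and a direction. The names `transform`, `ray_residuals`, and `fit_transform` are hypothetical; the solver is SciPy's `least_squares`.

```python
import numpy as np
from scipy.optimize import least_squares


def transform(params, points):
    """Map robot-frame points into the operator frame (assumed yaw + translation)."""
    yaw, tx, ty, tz = params
    c, s = np.cos(yaw), np.sin(yaw)
    rot = np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])
    return np.asarray(points, dtype=float) @ rot.T + np.array([tx, ty, tz])


def ray_residuals(params, origins, directions, points):
    """Perpendicular offsets between transformed points and the ray lines."""
    rel = transform(params, points) - origins
    # Component of each offset along its (unit) ray direction.
    along = np.sum(rel * directions, axis=1, keepdims=True)
    return (rel - along * directions).ravel()


def fit_transform(origins, directions, points, x0=(0.0, 0.0, 0.0, 0.0)):
    """Estimate (yaw, tx, ty, tz) and the RMS point-to-ray distance."""
    origins = np.asarray(origins, dtype=float)
    directions = np.asarray(directions, dtype=float)
    directions = directions / np.linalg.norm(directions, axis=1, keepdims=True)
    result = least_squares(ray_residuals, x0, args=(origins, directions, points))
    distances = np.linalg.norm(result.fun.reshape(-1, 3), axis=1)
    rms = float(np.sqrt(np.mean(distances ** 2)))
    return result.x, rms
```

Here `origins` and `directions` are N×3 arrays describing the rays \( r_i \) in the operator's frame, and `points` is the N×3 array of robot positions \( P_i \) in the robot's frame. Because the rays are treated as lines, this sketch does not penalize points that land behind the operator; a fuller version would need to handle that case.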

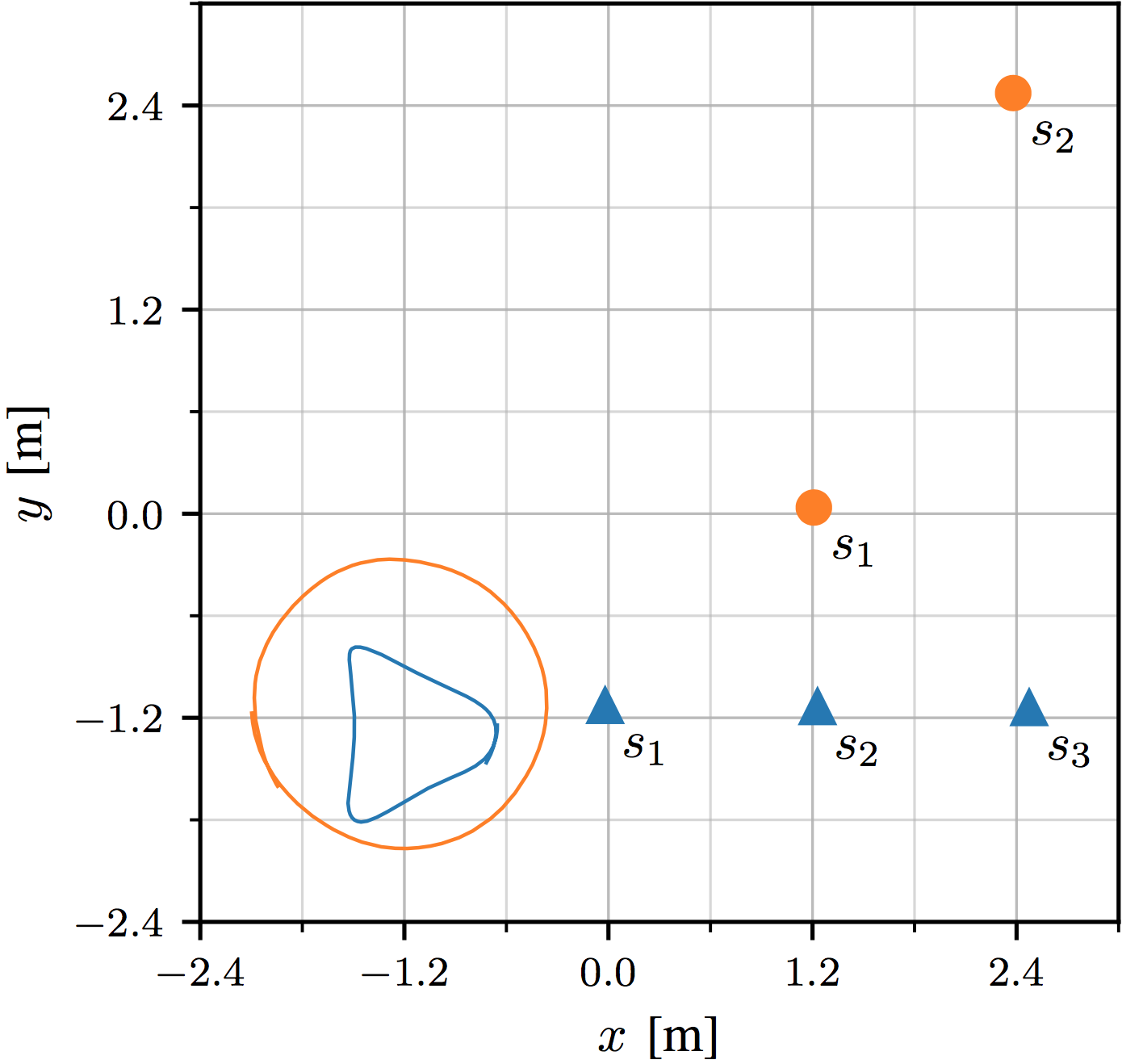

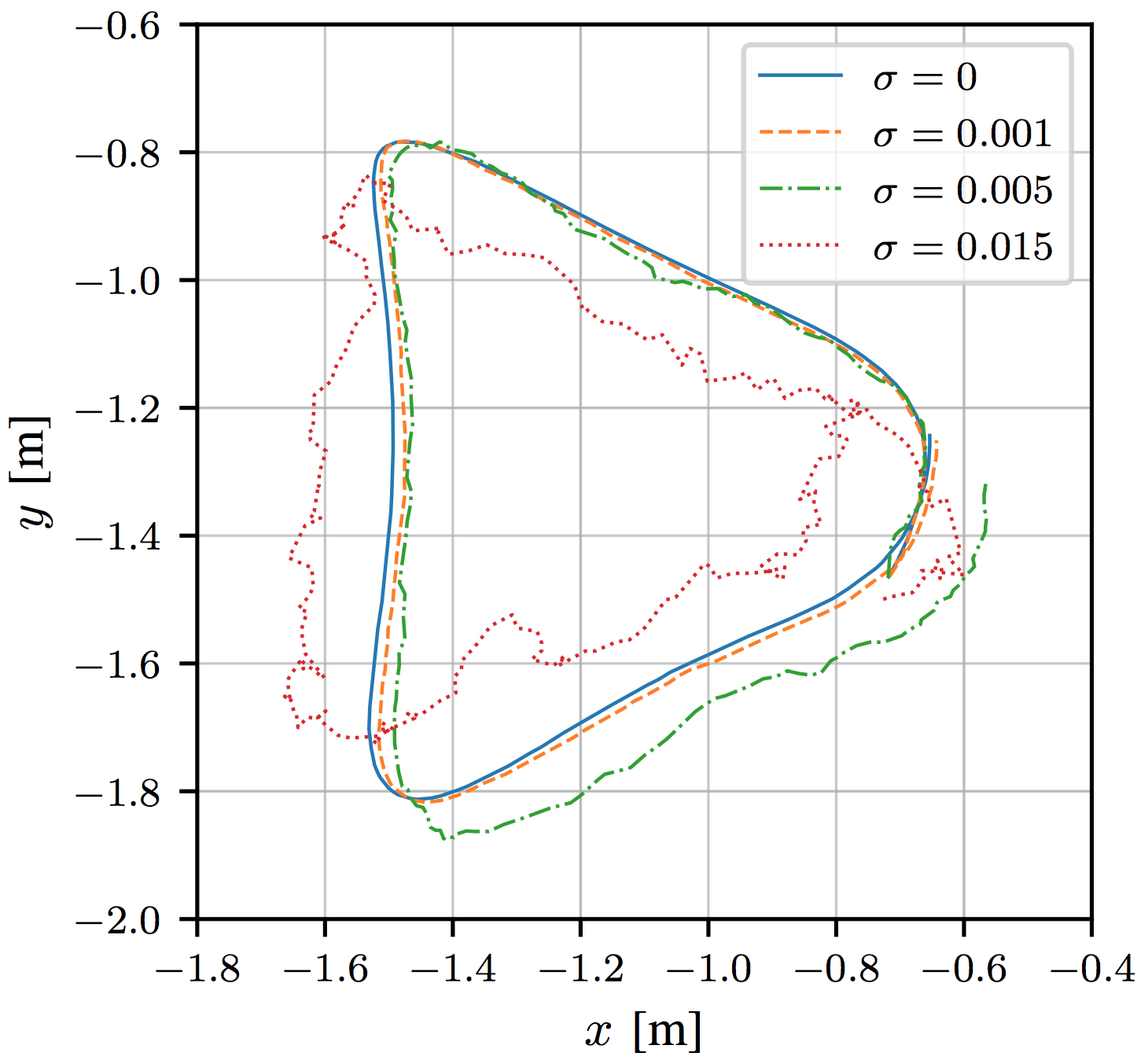

The data (see the data/ folder) were acquired using the two trajectories depicted below (left). We also distorted the ground-truth trajectories with a simple visual-odometry error model to check how our algorithm copes with errors in the robot's sensory information.

The residual error of the nonlinear optimization procedure can be used to identify which robot the operator pointed at among multiple moving robots. In this case, the algorithm described above changes as follows:
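
As a rough illustration of residual-based identification (an assumption about the procedure, which the paper describes in full), one could fit a transform against each candidate robot's trajectory and select the robot with the lowest residual. `identify_robot` and the `fit` callable are hypothetical names; `fit` stands for any routine that returns a `(transform, residual)` pair.

```python
from typing import Any, Callable, Dict, Hashable, Tuple


def identify_robot(
    rays: Any,
    trajectories: Dict[Hashable, Any],
    fit: Callable[[Any, Any], Tuple[Any, float]],
) -> Tuple[Hashable, Dict[Hashable, float]]:
    """Return the id of the best-matching robot and every robot's residual."""
    if not trajectories:
        raise ValueError("at least one candidate trajectory is required")
    # Fit the same pointing rays against each robot's own trajectory.
    residuals = {rid: fit(rays, traj)[1] for rid, traj in trajectories.items()}
    # The robot the operator followed should explain the rays best.
    best = min(residuals, key=residuals.get)
    return best, residuals
```

Returning all residuals alongside the winner lets a caller reject ambiguous cases, for example when the two lowest residuals are nearly equal.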

Please refer to the paper to see results and discussion.

Live Demo @HUBweek (Boston, MA)

We demonstrated our system live on October 8-9, 2018, at the Aerial Futures: The Drone Frontiers event during HUBweek in Boston, MA, USA.

This implementation uses the MetaMotionR+ bracelet (similar to a smartwatch) and the Crazyflie 2.0 drone. Note that we do not use any external localization system; the drone is controlled directly in its (visual) odometry frame.

Acknowledgment

This work was partially supported by the Swiss National Science Foundation (SNSF) through the National Centre of Competence in Research (NCCR) Robotics.

Publications

- B. Gromov, L. Gambardella, and A. Giusti, “Robot Identification and Localization with Pointing Gestures,” in 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 2018, pp. 3921–3928.