Proximity HRI Using Pointing Gestures and a Wrist-mounted IMU

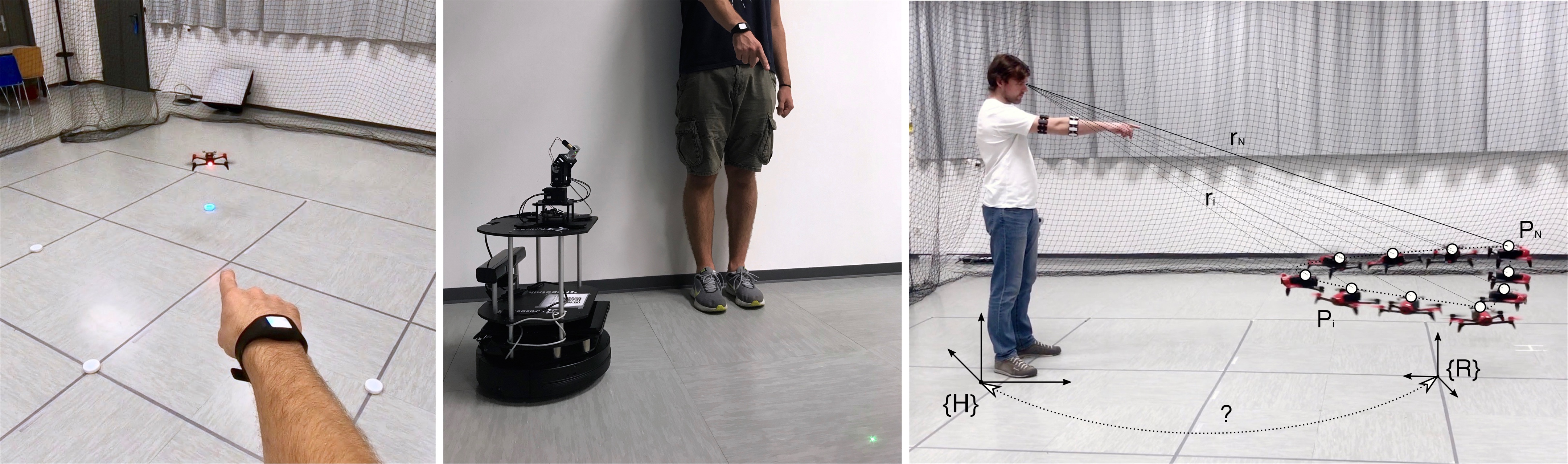

We demonstrate a complete implementation of a human-robot interaction pipeline that uses pointing gestures acquired with an inexpensive wrist-mounted IMU. Our approach allows the operator to localize, identify, select, and guide a robot in their direct line of sight with a simple pointing gesture.

The interaction starts when the operator points at the moving robot and keeps following it for a few seconds. During that time, we estimate the coordinate frame transformation between the operator and the robot. After that, the operator can guide the robot in their vicinity by pointing at desired locations on the ground.
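Guiding the robot by pointing at the ground requires turning a pointing ray into a ground location and then expressing that location in the robot's odometry frame. The sketch below shows one way to do this under stated assumptions: the ground is the plane z = 0 of the operator frame, and the estimated transformation is a yaw-only 2D transform of the form x_op = R(θ) x_robot + t. The function names are hypothetical.

```python
import numpy as np

def ray_ground_intersection(origin, direction, ground_z=0.0):
    """Intersect a pointing ray with the horizontal plane z = ground_z.

    Returns the 3D hit point, or None if the ray does not reach the plane
    in front of its origin (e.g. the operator points above the horizon).
    """
    origin = np.asarray(origin, dtype=float)
    direction = np.asarray(direction, dtype=float)
    if direction[2] >= 0.0:
        return None  # ray is horizontal or points upward
    lam = (ground_z - origin[2]) / direction[2]
    if lam <= 0.0:
        return None  # plane lies behind the ray origin
    return origin + lam * direction

def operator_to_robot(point_xy, theta, t):
    """Map a 2D point from the operator frame to the robot odometry frame.

    Assumes (hypothetically) the convention x_op = R(theta) x_robot + t,
    so the inverse is x_robot = R(theta)^T (x_op - t).
    """
    c, s = np.cos(theta), np.sin(theta)
    R = np.array([[c, -s], [s, c]])
    return R.T @ (np.asarray(point_xy, dtype=float) - t)
```

The x and y components of the hit point would then be passed to `operator_to_robot` to obtain a goal the robot's own controller can track.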

The core of the system is based on our recently published localization method, which establishes a transformation between the reference frames of a moving robot and an operator who continuously points at it. To do so, we synchronize each pointing ray, expressed in the operator’s reference frame, with the robot’s current position, expressed in its odometry frame. These synchronized pairs are then supplied to a simple optimization procedure that finds the coordinate transformation between the operator and the robot (Figure 1, right).
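A minimal, numpy-only sketch of this kind of localization is shown below. It is not the published method. It works in 2D (top view) and assumes a yaw-plus-translation transform x_op = R(θ) p + t. In place of the paper's optimization procedure, it grid-searches the yaw and, for each candidate, solves for the translation in closed form by linear least squares on the perpendicular distance between each transformed robot position and its pointing ray. All names are hypothetical.

```python
import numpy as np

def rot2d(theta):
    """2x2 rotation matrix for yaw angle theta (radians)."""
    c, s = np.cos(theta), np.sin(theta)
    return np.array([[c, -s], [s, c]])

def fit_translation(theta, origins, dirs, robot_xy):
    """For a fixed yaw, find t minimizing sum_i cross(d_i, R p_i + t - o_i)^2.

    The 2D cross product is linear in t, so this is ordinary least squares.
    With unit d_i, |cross| is the perpendicular distance of the transformed
    robot position from ray i.
    """
    q = robot_xy @ rot2d(theta).T - origins          # R p_i - o_i, shape (N, 2)
    A = np.stack([-dirs[:, 1], dirs[:, 0]], axis=1)  # coefficients of (t_x, t_y)
    b = -(dirs[:, 0] * q[:, 1] - dirs[:, 1] * q[:, 0])
    t, *_ = np.linalg.lstsq(A, b, rcond=None)
    cost = float(np.sum((A @ t - b) ** 2))
    # The cross product cannot tell "in front of" from "behind" the operator,
    # so reject solutions that place the robot mostly behind the rays.
    along = np.einsum("ij,ij->i", dirs, q + t)
    if np.median(along) <= 0.0:
        cost = np.inf
    return t, cost

def estimate_transform(origins, dirs, robot_xy, n_steps=3600):
    """Grid-search the yaw, solving for the translation at each step.

    origins, dirs: (N, 2) ray origins and directions in the operator frame.
    robot_xy:      (N, 2) synchronized robot positions in the odometry frame.
    Returns (theta, t) such that x_operator ~= R(theta) x_odom + t.
    """
    dirs = dirs / np.linalg.norm(dirs, axis=1, keepdims=True)
    best_theta, best_t, best_cost = 0.0, np.zeros(2), np.inf
    for theta in np.linspace(-np.pi, np.pi, n_steps, endpoint=False):
        t, cost = fit_translation(theta, origins, dirs, robot_xy)
        if cost < best_cost:
            best_theta, best_t, best_cost = theta, t, cost
    return best_theta, best_t
```

Because all rays start near the operator, the perpendicular-distance cost alone is symmetric under a 180° rotation of the solution (the robot mirrored behind the operator); the median check in `fit_translation` breaks that tie. The robot also has to move so that the rays span several directions, otherwise the translation is under-determined.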

The performance of the interface relies crucially on the operator’s perception. Because of simplifications in the pointing model we use and various sensor errors, the estimated frame transformations are expected to be imprecise. To deal with this problem, we provide live feedback throughout the entire interaction, so the operator can promptly adapt to any misalignment.
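The pointing model is not detailed here. As an illustration of the kind of simplification involved, the sketch below assumes an eye-finger model: the ray starts at the eyes and passes through the fingertip of a fully stretched arm whose yaw and pitch come from the wrist IMU. The body dimensions and the function name are hypothetical placeholders; any mismatch between them and the real operator produces exactly the kind of systematic error that live feedback lets the operator compensate for.

```python
import numpy as np

# Hypothetical body dimensions in metres (placeholders, not from the source).
EYE_HEIGHT = 1.60
SHOULDER_HEIGHT = 1.40   # shoulder assumed directly below the eyes
ARM_LENGTH = 0.70        # shoulder-to-fingertip, arm assumed fully stretched

def pointing_ray(yaw, pitch):
    """Return (origin, unit direction) of an eye-finger pointing ray.

    yaw, pitch: arm orientation in radians as reported by the wrist IMU
    (pitch > 0 means the arm points above the horizon). The operator frame
    is centred at the feet with z pointing up.
    """
    eye = np.array([0.0, 0.0, EYE_HEIGHT])
    shoulder = np.array([0.0, 0.0, SHOULDER_HEIGHT])
    arm_dir = np.array([np.cos(pitch) * np.cos(yaw),
                        np.cos(pitch) * np.sin(yaw),
                        np.sin(pitch)])
    finger = shoulder + ARM_LENGTH * arm_dir   # fingertip position
    direction = finger - eye                    # ray goes from the eyes through the fingertip
    return eye, direction / np.linalg.norm(direction)
```

Note that in this model a horizontal arm yields a slightly downward ray, because the eyes sit above the shoulder.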

When dealing with fast-moving robots, such as quadrotors, the live feedback is naturally provided by the robot’s own body. In contrast, using the same method with slow (ground) robots can be very inefficient. For such robots, we suggest mounting a simple pan-tilt laser turret on top. The turret shines the laser on the ground at known coordinates, and the operator points at the laser dot instead of at the robot itself.
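Aiming such a turret at a known ground point is a small geometry problem. The sketch below assumes, hypothetically, that the turret pivot sits at a known height directly above the origin of the robot frame, that pan is measured counter-clockwise from the robot's x axis, and that tilt is measured downward from the horizontal; real servos would add their own offsets and limits.

```python
import math

def turret_angles(x, y, height):
    """Pan/tilt angles (radians) that aim the laser at ground point (x, y).

    Hypothetical geometry: pivot `height` metres above the robot-frame
    origin; pan from the robot x axis, tilt downward from horizontal.
    """
    pan = math.atan2(y, x)
    tilt = math.atan2(height, math.hypot(x, y))
    return pan, tilt
```

Since the dot's coordinates are known in the robot frame, those coordinates can be paired with the operator's pointing rays in place of the robot's own position.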

Experiments

In this work we experimentally show the viability of our approach.

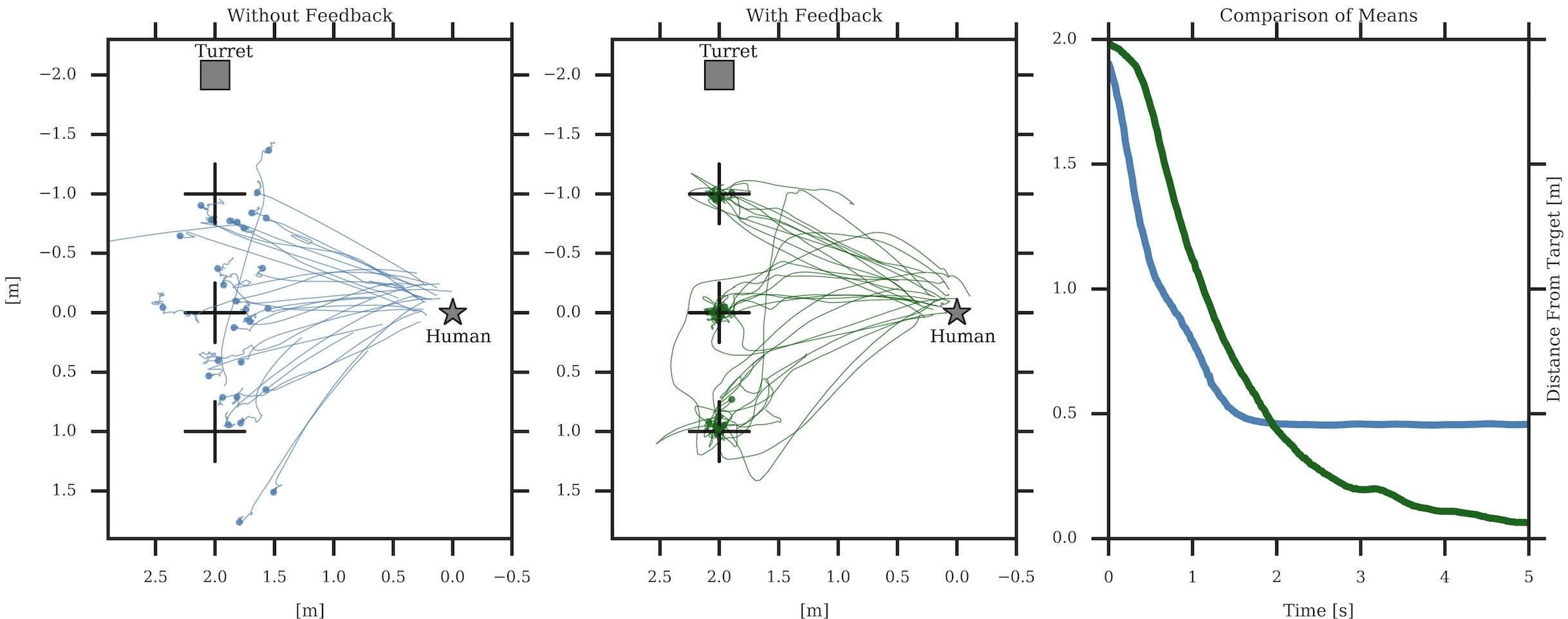

Visual feedback

We first study how the availability of visual feedback influences pointing accuracy with the proposed pointing interface. For this, we asked subjects to sequentially point at several targets on the floor in front of them. In the first condition, no additional visual feedback is provided, and subjects have to rely solely on their own perception (as in a natural setting). In the second condition, visual feedback is given in the form of a laser dot projected at the reconstructed pointing location, i.e. the location where the system thinks the user has pointed.

The data (Figure 2) show that, without feedback, users quickly reach an average distance of 0.5 m from the target but do not improve any further; this is expected, as the system has intrinsic inaccuracies (for example, in the reconstruction of the pointing rays \( r_i \)) that the user is unable to see and correct. When feedback is provided, the distance decreases to almost 0 within 5 seconds.
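The argument can be illustrated with a deliberately simple toy model, not fitted to the experimental data: the system adds a fixed bias, invisible to the operator, to the reconstructed location. Without feedback the operator has nothing to correct against; with feedback, each look at the dot lets them shift their aim. The bias, gain, and number of steps are invented for illustration.

```python
import numpy as np

def simulate_pointing(target, bias, steps=20, gain=0.5, feedback=True):
    """Toy model of pointing with and without visual feedback.

    The reconstructed location is the operator's aim plus a fixed bias.
    Without feedback the operator keeps aiming at the target; with feedback
    they move their aim by `gain` times the visible dot-to-target error.
    Returns the dot-to-target distance at each step.
    """
    target = np.asarray(target, dtype=float)
    aim = target.copy()
    errors = []
    for _ in range(steps):
        dot = aim + bias                       # where the system thinks they point
        errors.append(float(np.linalg.norm(dot - target)))
        if feedback:
            aim = aim + gain * (target - dot)  # correct toward the target
    return errors
```

With `gain=0.5` the error halves at every step, whereas without feedback it stays equal to the norm of the bias.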

This demonstrates that real-time feedback, provided either by a laser or by the robot’s own position, is a key component of our approach.

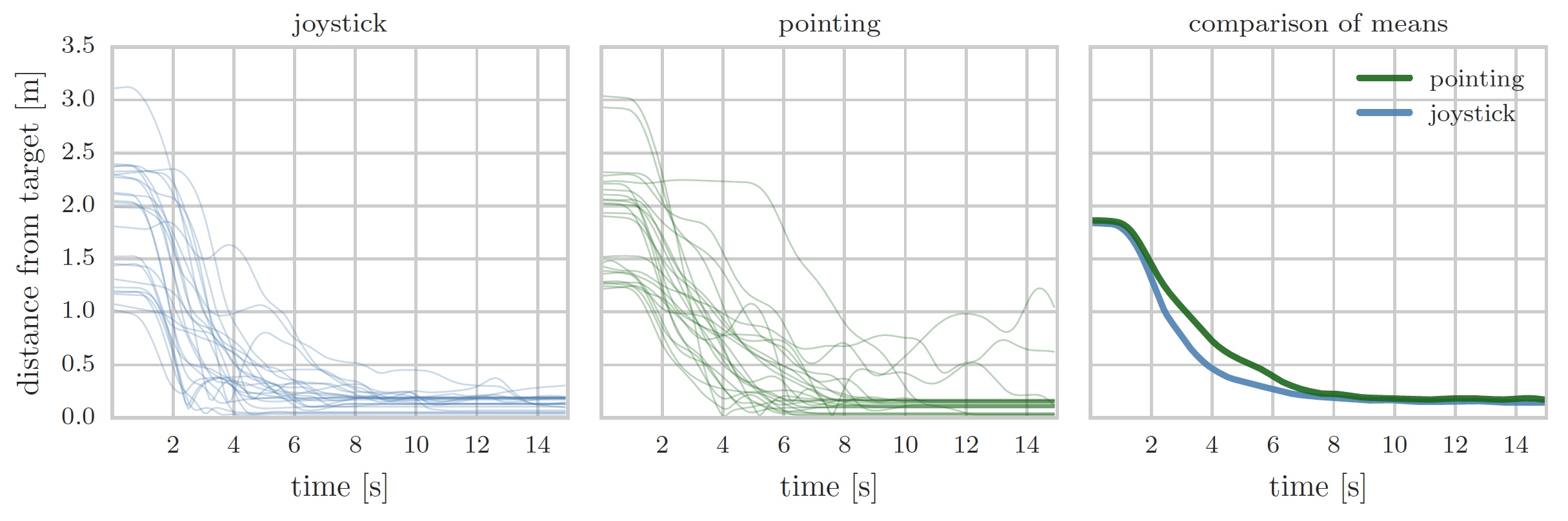

Joystick vs. Pointing

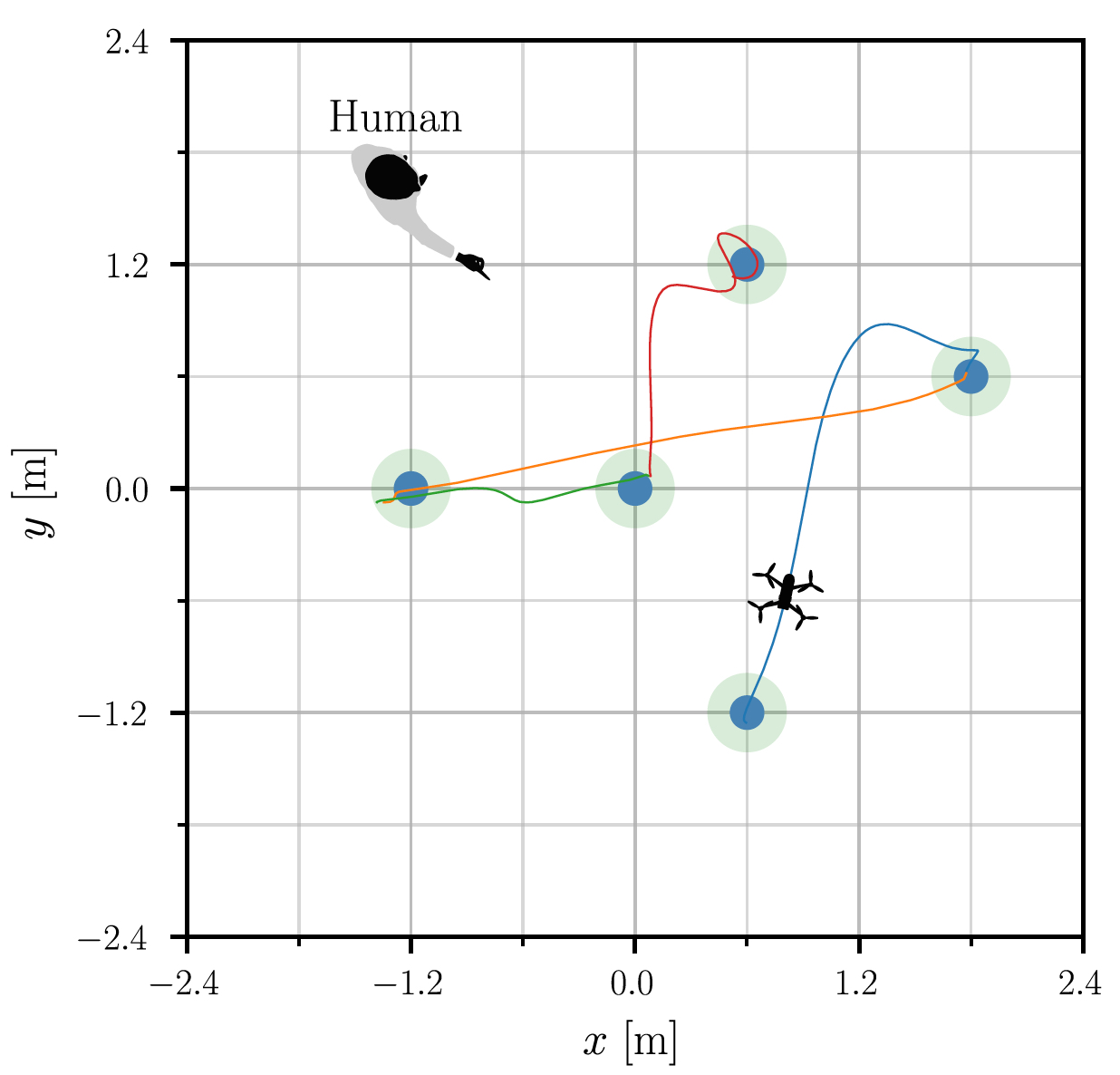

In the next experiment, we quantify and compare operator performance in a point-to-goal task using a conventional joystick (Logitech F710) and the proposed pointing interface. The task consists of guiding a quadrotor through a random sequence of five static waypoints (Figure 3).

The waypoints change color to guide the operator through the experimental sequence.
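How a waypoint counts as reached, and when it changes color, is not specified here. The sketch below is one hypothetical way to run such a trial: shuffle the five waypoints, treat the first unreached one as active (the one that would be highlighted), and advance when the robot comes within a hypothetical radius, logging the time. The radius, class, and function names are assumptions.

```python
import math
import random

def make_sequence(waypoints, seed=None):
    """Return the waypoints in a random order for one trial."""
    rng = random.Random(seed)
    order = list(waypoints)
    rng.shuffle(order)
    return order

class WaypointTask:
    """Tracks the active waypoint (the one that would be highlighted).

    A waypoint counts as reached when the robot is within `radius` metres
    of it; `radius` is a hypothetical threshold, not taken from the source.
    """

    def __init__(self, sequence, radius=0.3):
        self.sequence = sequence
        self.radius = radius
        self.index = 0
        self.reach_times = []

    @property
    def done(self):
        return self.index >= len(self.sequence)

    def update(self, robot_xy, t):
        """Feed the robot position at time t; advance if the goal is reached."""
        if self.done:
            return
        gx, gy = self.sequence[self.index]
        if math.hypot(robot_xy[0] - gx, robot_xy[1] - gy) <= self.radius:
            self.reach_times.append(t)
            self.index += 1
```

The logged `reach_times` give per-waypoint completion times that could be compared between the joystick and pointing conditions.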

The code and datasets to reproduce the results presented in this work can be found here.

Latest Version (March 2019)

The latest version of the system is implemented on the lightweight Crazyflie 2.0 drone.

Note: this implementation and the video were presented at the Human-Robot Interaction (HRI) 2019 conference, where they received the Best Demo and Honorable Mention (Best Video according to reviewers) awards.

Acknowledgment

This work was partially supported by the Swiss National Science Foundation (SNSF) through the National Centre of Competence in Research (NCCR) Robotics.

Publications


B. Gromov, G. Abbate, L. Gambardella, and A. Giusti, “Proximity Human-Robot Interaction Using Pointing Gestures and a Wrist-mounted IMU,” in 2019 IEEE International Conference on Robotics and Automation (ICRA), 2019, pp. 8084–8091.

@inproceedings{gromov2019proximity, author = {Gromov, Boris and Abbate, Gabriele and Gambardella, Luca and Giusti, Alessandro}, title = {Proximity Human-Robot Interaction Using Pointing Gestures and a Wrist-mounted {IMU}}, booktitle = {2019 IEEE International Conference on Robotics and Automation (ICRA)}, pages = {8084-8091}, year = {2019}, month = may, doi = {10.1109/ICRA.2019.8794399}, issn = {2577-087X}, video = {https://youtu.be/hyh_5A4RXZY}, }

B. Gromov, J. Guzzi, G. Abbate, L. Gambardella, and A. Giusti, “Video: Pointing Gestures for Proximity Interaction,” in HRI ’19: 2019 ACM/IEEE International Conference on Human-Robot Interaction, March 11–14, 2019, Daegu, Rep. of Korea, 2019.

@inproceedings{gromov2019video, author = {Gromov, Boris and Guzzi, J{\'e}r{\^o}me and Abbate, Gabriele and Gambardella, Luca and Giusti, Alessandro}, title = {Video: Pointing Gestures for Proximity Interaction}, booktitle = {HRI~'19: 2019 ACM/IEEE International Conference on Human-Robot Interaction, March 11--14, 2019, Daegu, Rep. of Korea}, conference = {2019 ACM/IEEE International Conference on Human-Robot Interaction}, location = {Daegu, Rep. of Korea}, year = {2019}, month = mar, video = {https://youtu.be/yafy-HZMk_U}, doi = {10.1109/HRI.2019.8673020}, }

B. Gromov, J. Guzzi, L. Gambardella, and A. Giusti, “Demo: Pointing Gestures for Proximity Interaction,” in HRI ’19: 2019 ACM/IEEE International Conference on Human-Robot Interaction, March 11–14, 2019, Daegu, Rep. of Korea, 2019.

@inproceedings{gromov2019demo, author = {Gromov, Boris and Guzzi, J{\'e}r{\^o}me and Gambardella, Luca and Giusti, Alessandro}, title = {Demo: Pointing Gestures for Proximity Interaction}, booktitle = {HRI~'19: 2019 ACM/IEEE International Conference on Human-Robot Interaction, March 11--14, 2019, Daegu, Rep. of Korea}, conference = {2019 ACM/IEEE International Conference on Human-Robot Interaction}, location = {Daegu, Rep. of Korea}, year = {2019}, month = mar, doi = {10.1109/HRI.2019.8673329}, }

B. Gromov, L. Gambardella, and A. Giusti, “Robot Identification and Localization with Pointing Gestures,” in 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 2018, pp. 3921–3928.

@inproceedings{gromov2018robot, author = {Gromov, Boris and Gambardella, Luca and Giusti, Alessandro}, title = {Robot Identification and Localization with Pointing Gestures}, booktitle = {2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS)}, year = {2018}, pages = {3921-3928}, keywords = {Robot sensing systems;Robot kinematics;Solid modeling;Manipulators;Drones;Three-dimensional displays}, doi = {10.1109/IROS.2018.8594174}, issn = {2153-0866}, month = oct, video = {https://youtu.be/VaQ3aZBf_uE}, }