About

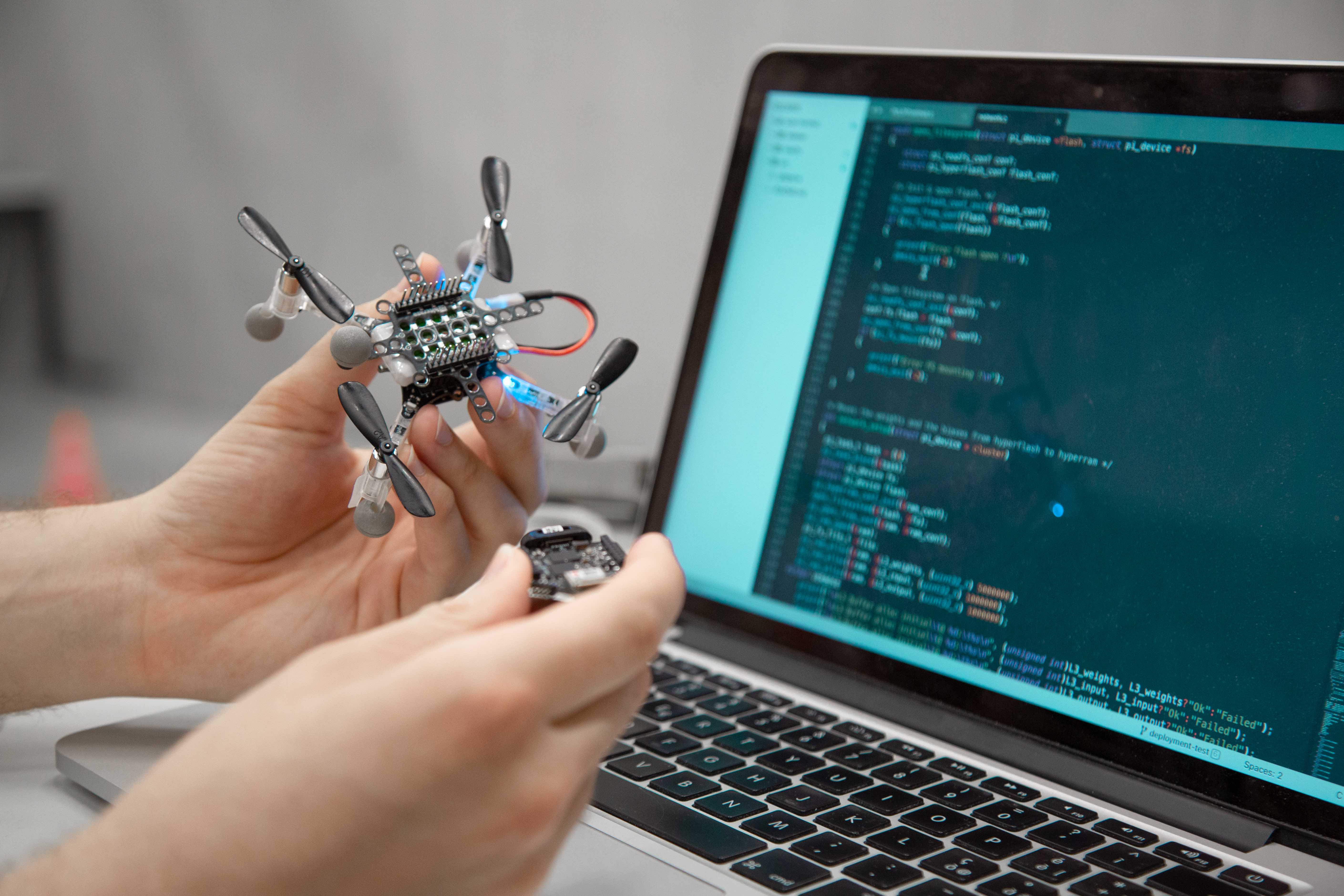

The NanoRobotics research group at IDSIA focuses its scientific effort on improving the onboard intelligence of ultra-constrained miniaturized robotic platforms, aiming at the same capability as biological systems. Leveraging Artificial Intelligence (AI) algorithms, the group's research areas include optimized ultra-low power embedded Cyber-Physical Systems (CPS), deep learning models for energy-efficient perception pipelines, multi-modal ultra-low power sensor fusion, Human-Robot Interaction (HRI) applications, and cyber-secure systems for Microcontroller Unit (MCU)-class devices.

Thanks to the close partnership with the Parallel Ultra-low Power international research project (PULP Platform), the NanoRobotics group at IDSIA boasts strong collaborations with ETH Zürich, the University of Bologna, and the Polytechnic University of Torino.

People

Dr. Daniele Palossi

Senior Researcher,

Group lead

Webpage

Dr. Lorenzo Lamberti

Postdoctoral Researcher

Webpage

Elia Cereda

PhD Student

Webpage

Luca Crupi

PhD Student

Webpage

News

Feb 02 2025

Our new journal article, “An Efficient Ground-aerial Transportation System for Pest Control Enabled by AI-based Autonomous Nano-UAVs,” has just been accepted for publication in the ACM Journal on Autonomous Transportation Systems. arXiv preprint

Jan 27 2025

Our new paper, “A Map-free Deep Learning-based Framework for Gate-to-Gate Monocular Visual Navigation aboard Miniaturized Aerial Vehicles,” has just been accepted at IEEE ICRA’25. arXiv preprint demo-video

Jul 11 2024

Our new paper, “Training on the Fly: On-device Self-supervised Learning aboard Nano-drones within 20mW,” has just been accepted for publication in the IEEE Transactions on Computer-Aided Design of Integrated Circuits and Systems (TCAD) and for oral presentation at CODES+ISSS’24 during the next ESWEEK. arXiv preprint

Jul 5 2024

Our new journal article, “Distilling Tiny and Ultra-fast Deep Neural Networks for Autonomous Navigation on Nano-UAVs,” in collaboration with UNIBO, TII, and ETHZ, has just been accepted for publication in the IEEE Internet of Things Journal. arXiv preprint

Jun 19 2024

The paper, “Multi-resolution Rescored ByteTrack for Video Object Detection on Ultra-low-power Embedded Systems,” coauthored by UNIBO, IDSIA, KUL, and ETHZ, received the Best Paper Award at the 20th Embedded Vision Workshop in conjunction with the CVPR Conference in Seattle, Washington, USA. CVF open access

Apr 10 2024

Our new journal article, “Vision-state Fusion: Improving Deep Neural Networks for Autonomous Robotics,” has just been accepted for publication in the Springer Journal of Intelligent & Robotic Systems. Springer open access.

Mar 15 2024

Our new paper, “Fusing Multi-sensor Input with State Information on TinyML Brains for Autonomous Nano-drones,” was presented at the European Robotics Forum 2024. arXiv preprint.

Mar 15 2024

Our new paper, “A Deep Learning-based Pest Insect Monitoring System for Ultra-low Power Pocket-sized Drones,” was accepted for publication in the Wi-DroIT 2024 Workshop in conjunction with the IEEE International Conference on Distributed Computing in Smart Systems and Internet of Things (DCOSS). arXiv preprint

Jan 29, 2024

Two new papers have just been accepted at IEEE ICRA’24. “On-device Self-supervised Learning of Visual Perception Tasks aboard Hardware-limited Nano-quadrotors,” arXiv preprint demo-video and “High-throughput Visual Nano-drone to Nano-drone Relative Localization using Onboard Fully Convolutional Networks” arXiv preprint demo-video.

Jan 26, 2024

Our new paper, “Self-Supervised Learning of Visual Robot Localization Using LED State Prediction as a Pretext Task,” has just been accepted for publication in IEEE Robotics and Automation Letters (RA-L). arXiv preprint repository.

Jan 19, 2024

We contributed to the novel paper “A Heterogeneous RISC-V based SoC for Secure Nano-UAV Navigation,” which was just accepted at the IEEE Transactions on Circuits and Systems I (TCAS-I). arXiv preprint.

Dec 18, 2023

Our new paper, “Adaptive Deep Learning for Efficient Visual Pose Estimation aboard Ultra-low-power Nano-drones,” in collaboration with POLITO, has just been accepted at ACM Design, Automation and Test in Europe Conference (DATE’24). arXiv preprint.

Dec 15, 2023

Our new paper, “A Sim-to-Real Deep Learning-based Framework for Autonomous Nano-drone Racing,” has just been accepted for publication in IEEE Robotics and Automation Letters (RA-L). arXiv preprint video. This work describes our autonomous navigation and obstacle avoidance system for palm-sized quadrotors, developed in collaboration with Università di Bologna and TII Abu Dhabi and winner of the 1st Nanocopter AI Challenge at IMAV 2022, Delft, Netherlands.

Sep 25, 2023

Our new paper, “Secure Deep Learning-based Distributed Intelligence on Pocket-sized Drones,” received the Best Paper Award at the 2nd Workshop on Security and Privacy in Connected Embedded Systems (SPICES) during the ACM EWSN’23 conference in Rende, Italy. Our work proposes a secure computational paradigm for deep learning workloads that allows resource-constrained devices, such as nano-drones, to take advantage of powerful remote computation nodes while preserving security against external attackers.

Jun 29, 2023

Our new paper, “Secure Deep Learning-based Distributed Intelligence on Pocket-sized Drones,” has just been accepted at ACM EWSN’23. arXiv preprint demo-video.

Jun 21, 2023

Our new paper, “Sim-to-Real Vision-depth Fusion CNNs for Robust Pose Estimation Aboard Autonomous Nano-quadcopters,” has just been accepted at IEEE IROS’23. arXiv preprint demo-video.

May 4, 2023

We contributed to the novel paper “Shaheen: An Open, Secure, and Scalable RV64 SoC for Autonomous Nano-UAVs,” just accepted at HotChips’23.

Jan 20, 2023

We contributed to the novel paper “Cyber Security aboard Micro Aerial Vehicles: An OpenTitan-based Visual Communication Use Case,” just accepted at IEEE ISCAS’23. arXiv preprint demo-video.

Jan 17, 2023

Two new papers have just been accepted at IEEE ICRA’23. “Deep Neural Network Architecture Search for Accurate Visual Pose Estimation aboard Nano-UAVs,” arXiv preprint demo-video and “Ultra-low Power Deep Learning-based Monocular Relative Localization Onboard Nano-quadrotors” arXiv preprint demo-video.

Publications

Vision-state Fusion: Improving Deep Neural Networks for Autonomous Robotics

E. Cereda, S. Bonato, M. Nava, A. Giusti, and D. Palossi

in Journal of Intelligent & Robotic Systems, vol. 110, 58 (2024)

@article{cereda2024vision,

author={Cereda, Elia and Bonato, Stefano and Nava, Mirko and Giusti, Alessandro and Palossi, Daniele},

journal={Journal of Intelligent & Robotic Systems},

title={Vision-state Fusion: Improving Deep Neural Networks for Autonomous Robotics},

year={2024},

volume={110},

number={58},

doi={10.1007/s10846-024-02091-6}

}

Abstract

Vision-based deep learning perception fulfills a paramount role in robotics, facilitating solutions to many challenging scenarios, such as acrobatic maneuvers of autonomous unmanned aerial vehicles (UAVs) and robot-assisted high-precision surgery. Control-oriented end-to-end perception approaches, which directly output control variables for the robot, commonly take advantage of the robot’s state estimation as an auxiliary input. When intermediate outputs are estimated and fed to a lower-level controller, i.e., mediated approaches, the robot’s state is commonly used as an input only for egocentric tasks, which estimate physical properties of the robot itself. In this work, we propose to apply a similar approach for the first time – to the best of our knowledge – to non-egocentric mediated tasks, where the estimated outputs refer to an external subject. We prove how our general methodology improves the regression performance of deep convolutional neural networks (CNNs) on a broad class of non-egocentric 3D pose estimation problems, with minimal computational cost. By analyzing three highly-different use cases, spanning from grasping with a robotic arm to following a human subject with a pocket-sized UAV, our results consistently improve the R² regression metric, up to +0.51, compared to their stateless baselines. Finally, we validate the in-field performance of a closed-loop autonomous cm-scale UAV on the human pose estimation task. Our results show a significant reduction, i.e., 24% on average, on the mean absolute error of our stateful CNN, compared to a State-of-the-Art stateless counterpart.
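For readers unfamiliar with the metric, the R² regression score reported in this abstract is the coefficient of determination. The snippet below is a minimal sketch of its standard definition, not the authors' evaluation code; the function name `r2_score` and the sample values in the usage note are illustrative assumptions.

```python
def r2_score(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("inputs must be non-empty and of equal length")
    mean_true = sum(y_true) / len(y_true)
    # Residual sum of squares: error of the model's predictions.
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    # Total sum of squares: error of always predicting the mean.
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)
    if ss_tot == 0:
        raise ValueError("R2 is undefined when y_true is constant")
    return 1.0 - ss_res / ss_tot
```

Under this definition a perfect predictor scores 1, and a predictor that always outputs the mean of the ground truth scores 0, so an improvement such as +0.51 is measured on that scale.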

On-device Self-supervised Learning of Visual Perception Tasks aboard Hardware-limited Nano-quadrotors

E. Cereda, M. Rusci, A. Giusti, and D. Palossi

in Proceedings of the 2024 IEEE International Conference on Robotics and Automation (ICRA), 2024

@inproceedings{cereda2024ondevice,

title={On-device Self-supervised Learning of Visual Perception Tasks aboard Hardware-limited Nano-quadrotors},

author={Elia Cereda and Manuele Rusci and Alessandro Giusti and Daniele Palossi},

year={2024},

eprint={2403.04071},

archivePrefix={arXiv},

primaryClass={cs.RO}

}

Abstract

Sub-50g nano-drones are gaining momentum in both academia and industry. Their most compelling applications rely on onboard deep learning models for perception despite severe hardware constraints (i.e., sub-100mW processor). When deployed in unknown environments not represented in the training data, these models often underperform due to domain shift. To cope with this fundamental problem, we propose, for the first time, on-device learning aboard nano-drones, where the first part of the in-field mission is dedicated to self-supervised fine-tuning of a pre-trained convolutional neural network (CNN). Leveraging a real-world vision-based regression task, we thoroughly explore performance-cost trade-offs of the fine-tuning phase along three axes: i) dataset size (more data increases the regression performance but requires more memory and longer computation); ii) methodologies (e.g., fine-tuning all model parameters vs. only a subset); and iii) self-supervision strategy. Our approach demonstrates an improvement in mean absolute error up to 30% compared to the pre-trained baseline, requiring only 22s fine-tuning on an ultra-low-power GWT GAP9 System-on-Chip. Addressing the domain shift problem via on-device learning aboard nano-drones not only marks a novel result for hardware-limited robots but lays the ground for more general advancements for the entire robotics community.

High-throughput Visual Nano-drone to Nano-drone Relative Localization using Onboard Fully Convolutional Networks

L. Crupi, A. Giusti, and D. Palossi

in Proceedings of the 2024 IEEE International Conference on Robotics and Automation (ICRA), 2024

@inproceedings{crupi2024highthroughput,

title={High-throughput Visual Nano-drone to Nano-drone Relative Localization using Onboard Fully Convolutional Networks},

author={Luca Crupi and Alessandro Giusti and Daniele Palossi},

year={2024},

eprint={2402.13756},

archivePrefix={arXiv},

primaryClass={cs.CV}

}

Abstract

Relative drone-to-drone localization is a fundamental building block for any swarm operations. We address this task in the context of miniaturized nano-drones, i.e., 10cm in diameter, which show an ever-growing interest due to novel use cases enabled by their reduced form factor. The price for their versatility comes with limited onboard resources, i.e., sensors, processing units, and memory, which limits the complexity of the onboard algorithms. A traditional solution to overcome these limitations is represented by lightweight deep learning models directly deployed aboard nano-drones. This work tackles the challenging relative pose estimation between nano-drones using only a gray-scale low-resolution camera and an ultra-low-power System-on-Chip (SoC) hosted onboard. We present a vertically integrated system based on a novel vision-based fully convolutional neural network (FCNN), which runs at 39Hz within 101mW onboard a Crazyflie nano-drone extended with the GWT GAP8 SoC. We compare our FCNN against three State-of-the-Art (SoA) systems. Considering the best-performing SoA approach, our model results in an R-squared improvement from 32 to 47% on the horizontal image coordinate and from 18 to 55% on the vertical image coordinate, on a real-world dataset of 30k images. Finally, our in-field tests show a reduction of the average tracking error of 37% compared to a previous SoA work and an endurance performance up to the entire battery lifetime of 4 minutes.

Self-supervised Learning of Visual Robot Localization Using LED State Prediction as a Pretext Task

M. Nava, N. Carlotti, L. Crupi, D. Palossi, and A. Giusti

in IEEE Robotics and Automation Letters (RA-L), 2024

Abstract

We propose a novel self-supervised approach for learning to visually localize robots equipped with controllable LEDs. We rely on a few training samples labeled with position ground truth and many training samples in which only the LED state is known, whose collection is cheap. We show that using LED state prediction as a pretext task significantly helps to learn the visual localization end task. The resulting model does not require knowledge of LED states during inference. We instantiate the approach to visual relative localization of nano-quadrotors: experimental results show that using our pretext task significantly improves localization accuracy (from 68.3% to 76.2%) and outperforms alternative strategies, such as a supervised baseline, model pre-training, and an autoencoding pretext task. We deploy our model aboard a 27-g Crazyflie nano-drone, running at 21 fps, in a position-tracking task of a peer nano-drone. Our approach, relying on position labels for only 300 images, yields a mean tracking error of 4.2 cm versus 11.9 cm of a supervised baseline model trained without our pretext task. Videos and code of the proposed approach are available at https://github.com/idsia-robotics/leds-as-pretext.

A Heterogeneous RISC-V-based SoC for Secure Nano-UAV Navigation

L. Valente, A. Nadalini, A. Veeran, M. Sinigaglia, B. Sa, N. Wistoff, Y. Tortorella, S. Benatti, R. Psiakis, A. Kulmala, B. Mohammad, S. Pinto, D. Palossi, L. Benini, and D. Rossi

in IEEE Transactions on Circuits and Systems I: Regular Papers (TCAS-I), 2024

@article{valente2024hetero,

title={A Heterogeneous RISC-V Based SoC for Secure Nano-UAV Navigation},

author={Valente, Luca and Nadalini, Alessandro and Veeran, Asif Hussain Chiralil and Sinigaglia, Mattia and Sá, Bruno and Wistoff, Nils and Tortorella, Yvan and Benatti, Simone and Psiakis, Rafail and Kulmala, Ari and Mohammad, Baker and Pinto, Sandro and Palossi, Daniele and Benini, Luca and Rossi, Davide},

journal={IEEE Transactions on Circuits and Systems I: Regular Papers},

year={2024},

volume={},

number={},

pages={1-14},

doi={10.1109/TCSI.2024.3359044}

}

Abstract

The rapid advancement of energy-efficient parallel ultra-low-power (ULP) microcontroller units (MCUs) is enabling the development of autonomous nano-sized unmanned aerial vehicles (nano-UAVs). These sub-10cm drones represent the next generation of unobtrusive robotic helpers and ubiquitous smart sensors. However, nano-UAVs face significant power and payload constraints while requiring advanced computing capabilities akin to standard drones, including real-time Machine Learning (ML) performance and the safe co-existence of general-purpose and real-time OSs. Although some advanced parallel ULP MCUs offer the necessary ML computing capabilities within the prescribed power limits, they rely on small main memories (<1MB) and microcontroller-class CPUs with no virtualization or security features, and hence only support simple bare-metal runtimes. In this work, we present Shaheen, a 9mm² 200mW SoC implemented in 22nm FDX technology. Differently from state-of-the-art MCUs, Shaheen integrates a Linux-capable RV64 core, compliant with the v1.0 ratified Hypervisor extension and equipped with timing channel protection, along with a low-cost and low-power memory controller exposing up to 512MB of off-chip low-cost low-power HyperRAM directly to the CPU. At the same time, it integrates a fully programmable energy- and area-efficient multi-core cluster of RV32 cores optimized for general-purpose DSP as well as reduced- and mixed-precision ML. To the best of the authors' knowledge, it is the first silicon prototype of a ULP SoC coupling the RV64 and RV32 cores in a heterogeneous host+accelerator architecture fully based on the RISC-V ISA. We demonstrate the capabilities of the proposed SoC on a wide range of benchmarks relevant to nano-UAV applications. The cluster can deliver up to 90GOp/s and up to 1.8TOp/s/W on 2-bit integer kernels and up to 7.9GFLOp/s and up to 150GFLOp/s/W on 16-bit FP kernels.

A Sim-to-Real Deep Learning-based Framework for Autonomous Nano-drone Racing

L. Lamberti, E. Cereda, G. Abbate, L. Bellone, V. J. Kartsch Morinigo, M. Barcis, A. Barcis, A. Giusti, F. Conti, and D. Palossi

in IEEE Robotics and Automation Letters (RA-L), 2024

@article{lamberti2024imav,

author={Lamberti, Lorenzo and Cereda, Elia and Abbate, Gabriele and Bellone, Lorenzo and Morinigo, Victor Javier Kartsch and Barciś, Michał and Barciś, Agata and Giusti, Alessandro and Conti, Francesco and Palossi, Daniele},

journal={IEEE Robotics and Automation Letters},

title={A Sim-to-Real Deep Learning-Based Framework for Autonomous Nano-Drone Racing},

year={2024},

volume={9},

number={2},

pages={1899-1906},

doi={10.1109/LRA.2024.3349814}

}

Abstract

Autonomous drone racing competitions are a proxy to improve unmanned aerial vehicles' perception, planning, and control skills. The recent emergence of autonomous nano-sized drone racing imposes new challenges, as their ~10cm form factor heavily restricts the resources available onboard, including memory, computation, and sensors. This paper describes the methodology and technical implementation of the system winning the first autonomous nano-drone racing international competition: the IMAV 2022 Nanocopter AI Challenge. We developed a fully onboard deep learning approach for visual navigation trained only on simulation images to achieve this goal. Our approach includes a convolutional neural network for obstacle avoidance, a sim-to-real dataset collection procedure, and a navigation policy that we selected, characterized, and adapted through simulation and actual in-field experiments. Our system ranked 1st among seven competing teams at the competition. In our best attempt, we scored 115m of traveled distance in the allotted 5-minute flight, never crashing while dodging static and dynamic obstacles. Sharing our knowledge with the research community, we aim to provide a solid groundwork to foster future development in this field.

Adaptive Deep Learning for Efficient Visual Pose Estimation aboard Ultra-low-power Nano-drones

B. A. Motetti, L. Crupi, M. O. Elshaigi, M. Risso, D. Jahier Pagliari, D. Palossi, and A. Burrello

in Proceedings of the 2024 International Conference on Design, Automation and Test in Europe (DATE), 2024

@misc{motetti2024adaptive,

title={Adaptive Deep Learning for Efficient Visual Pose Estimation aboard Ultra-low-power Nano-drones},

author={Beatrice Alessandra Motetti and Luca Crupi and Mustafa Omer Mohammed Elamin Elshaigi and Matteo Risso and Daniele Jahier Pagliari and Daniele Palossi and Alessio Burrello},

year={2024},

eprint={2401.15236},

archivePrefix={arXiv},

primaryClass={cs.CV}

}

Abstract

Sub-10cm diameter nano-drones are gaining momentum thanks to their applicability in scenarios prevented to bigger flying drones, such as in narrow environments and close to humans. However, their tiny form factor also brings their major drawback: ultra-constrained memory and processors for the onboard execution of their perception pipelines. Therefore, lightweight deep learning-based approaches are becoming increasingly popular, stressing how computational efficiency and energy-saving are paramount as they can make the difference between a fully working closed-loop system and a failing one. In this work, to maximize the exploitation of the ultra-limited resources aboard nano-drones, we present a novel adaptive deep learning-based mechanism for the efficient execution of a vision-based human pose estimation task. We leverage two State-of-the-Art (SoA) convolutional neural networks (CNNs) with different regression performance vs. computational costs trade-offs. By combining these CNNs with three novel adaptation strategies based on the output's temporal consistency and on auxiliary tasks to swap the CNN being executed proactively, we present six different systems. On a real-world dataset and the actual nano-drone hardware, our best-performing system, compared to executing only the bigger and most accurate SoA model, shows 28% latency reduction while keeping the same mean absolute error (MAE), 3% MAE reduction while being iso-latency, and the absolute peak performance, i.e., 6% better than SoA model.
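The abstract mentions adaptation strategies that swap the executed CNN based on the temporal consistency of the output. The sketch below illustrates that general idea only: it is not the paper's mechanism, and the switching rule, the `threshold` value, and the names `select_model` and `run_adaptive` are hypothetical choices made for illustration.

```python
def select_model(prev_output, curr_output, threshold=0.1):
    """Return 'big' when two consecutive outputs disagree, else 'small'."""
    # Hypothetical rule: largest per-component change between two frames.
    change = max(abs(c - p) for c, p in zip(curr_output, prev_output))
    return "big" if change > threshold else "small"


def run_adaptive(frames, small_model, big_model, threshold=0.1):
    """Process frames, picking the model for the next frame from the last two outputs."""
    outputs, choices = [], []
    current = "small"  # start with the cheaper model
    for frame in frames:
        model = big_model if current == "big" else small_model
        outputs.append(model(frame))
        choices.append(current)
        if len(outputs) >= 2:
            current = select_model(outputs[-2], outputs[-1], threshold)
    return outputs, choices
```

With this rule the cheaper model runs while predictions are stable, and the more accurate one takes over for the frame that follows an abrupt change, which is one simple way to trade latency against error.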

Sim-to-Real Vision-depth Fusion CNNs for Robust Pose Estimation Aboard Autonomous Nano-quadcopters

L. Crupi, E. Cereda, A. Giusti, and D. Palossi

in Proceedings of the 2023 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 2023

@inproceedings{crupi2023sim,

author={Crupi, Luca and Cereda, Elia and Giusti, Alessandro and Palossi, Daniele},

booktitle={2023 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS)},

title={Sim-to-Real Vision-Depth Fusion CNNs for Robust Pose Estimation Aboard Autonomous Nano-quadcopters},

year={2023},

volume={},

number={},

pages={7711-7717},

doi={10.1109/IROS55552.2023.10342162}

}

Abstract

Nano-quadcopters are versatile platforms attracting the interest of both academia and industry. Their tiny form factor, i.e., 10 cm diameter, makes them particularly useful in narrow scenarios and harmless in human proximity. However, these advantages come at the price of ultra-constrained onboard computational and sensorial resources for autonomous operations. This work addresses the task of estimating human pose aboard nano-drones by fusing depth and images in a novel CNN exclusively trained in simulation yet capable of robust predictions in the real world. We extend a commercial off-the-shelf (COTS) Crazyflie nano-drone -- equipped with a 320×240 px camera and an ultra-low-power System-on-Chip -- with a novel multi-zone (8×8) depth sensor. We design and compare different deep-learning models that fuse depth and image inputs. Our models are trained exclusively on simulated data for both inputs, and transfer well to the real world: field testing shows an improvement of 58% and 51% of our depth+camera system w.r.t. a camera-only State-of-the-Art baseline on the horizontal and angular mean pose errors, respectively. Our prototype is based on COTS components, which facilitates reproducibility and adoption of this novel class of systems.

Secure Deep Learning-based Distributed Intelligence on Pocket-sized Drones [Best Paper Award]

E. Cereda, A. Giusti, and D. Palossi

in Proceedings of the 2023 International Conference on Embedded Wireless Systems and Networks (EWSN), 2023

@misc{cereda2023secure,

author={Cereda, Elia and Giusti, Alessandro and Palossi, Daniele},

title={Secure Deep Learning-based Distributed Intelligence on Pocket-sized Drones},

year={2023},

eprint={2307.01559},

archivePrefix={arXiv},

primaryClass={cs.RO}

}

Abstract

Palm-sized nano-drones are an appealing class of edge nodes, but their limited computational resources prevent running large deep-learning models onboard. Adopting an edge-fog computational paradigm, we can offload part of the computation to the fog; however, this poses security concerns if the fog node, or the communication link, cannot be trusted. To tackle this concern, we propose a novel distributed edge-fog execution scheme that validates fog computation by redundantly executing a random subnetwork aboard our nano-drone. Compared to a State-of-the-Art visual pose estimation network that entirely runs onboard, a larger network executed in a distributed way improves the R² score by +0.19; in case of attack, our approach detects it within 2s with 95% probability.
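A figure such as "detected within 2s with 95% probability" can be read through a simple model. Assuming, hypothetically, that each redundantly checked frame exposes an attack independently with a fixed probability `p_frame`, the chance of detection within `n` frames is 1 - (1 - p_frame)^n. The sketch below encodes that model; the per-frame probability and both function names are assumptions, not values or code from the paper.

```python
import math


def detection_probability(p_frame, n_frames):
    """Probability that at least one of n independent checks catches the attack."""
    return 1.0 - (1.0 - p_frame) ** n_frames


def frames_for_confidence(p_frame, target=0.95):
    """Smallest number of checked frames reaching the target detection probability."""
    if not 0.0 < p_frame < 1.0:
        raise ValueError("p_frame must be strictly between 0 and 1")
    if not 0.0 < target < 1.0:
        raise ValueError("target must be strictly between 0 and 1")
    return math.ceil(math.log(1.0 - target) / math.log(1.0 - p_frame))
```

For example, with an assumed per-frame detection probability of 0.5, five checked frames are enough to exceed 95%; dividing that frame count by the checking rate gives the corresponding detection time.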

Deep Neural Network Architecture Search for Accurate Visual Pose Estimation aboard Nano-UAVs

E. Cereda, L. Crupi, M. Risso, A. Burrello, L. Benini, A. Giusti, D. Jahier Pagliari, and D. Palossi

in Proceedings of the 2023 IEEE International Conference on Robotics and Automation (ICRA), pp. 6065-6071, 2023

@inproceedings{cereda2023deep,

author={Cereda, Elia and Crupi, Luca and Risso, Matteo and Burrello, Alessio and Benini, Luca and Giusti, Alessandro and Pagliari, Daniele Jahier and Palossi, Daniele},

booktitle={2023 IEEE International Conference on Robotics and Automation (ICRA)},

title={Deep Neural Network Architecture Search for Accurate Visual Pose Estimation aboard Nano-UAVs},

year={2023},

volume={},

number={},

pages={6065-6071},

doi={10.1109/ICRA48891.2023.10160369}

}

Abstract

Miniaturized autonomous unmanned aerial vehicles (UAVs) are an emerging and trending topic. With their form factor as big as the palm of one hand, they can reach spots otherwise inaccessible to bigger robots and safely operate in human surroundings. The simple electronics aboard such robots (sub-100mW) make them particularly cheap and attractive but pose significant challenges in enabling onboard sophisticated intelligence. In this work, we leverage a novel neural architecture search (NAS) technique to automatically identify several Pareto-optimal convolutional neural networks (CNNs) for a visual pose estimation task. Our work demonstrates how real-life and field-tested robotics applications can concretely leverage NAS technologies to automatically and efficiently optimize CNNs for the specific hardware constraints of small UAVs. We deploy several NAS-optimized CNNs and run them in closed-loop aboard a 27-g Crazyflie nano-UAV equipped with a parallel ultra-low power System-on-Chip. Our results improve the State-of-the-Art by reducing the in-field control error of 32% while achieving a real-time onboard inference-rate of ~10Hz@10mW and ~50Hz@90mW.

Ultra-low Power Deep Learning-based Monocular Relative Localization Onboard Nano-quadrotors

S. Bonato, S. C. Lambertenghi, E. Cereda, A. Giusti, and D. Palossi

in Proceedings of the 2023 IEEE International Conference on Robotics and Automation (ICRA), pp. 3411-3417, 2023

@inproceedings{bonato2023ultra,

author={Bonato, Stefano and Lambertenghi, Stefano Carlo and Cereda, Elia and Giusti, Alessandro and Palossi, Daniele},

booktitle={2023 IEEE International Conference on Robotics and Automation (ICRA)},

title={Ultra-low Power Deep Learning-based Monocular Relative Localization Onboard Nano-quadrotors},

year={2023},

volume={},

number={},

pages={3411-3417},

doi={10.1109/ICRA48891.2023.10161127}

}

Abstract

Precise relative localization is a crucial functional block for swarm robotics. This work presents a novel autonomous end-to-end system that addresses the monocular relative localization, through deep neural networks (DNNs), of two peer nano-drones, i.e., sub-40g of weight and sub-100mW processing power. To cope with the ultra-constrained nano-drone platform, we propose a vertically-integrated framework, from the dataset collection to the final in-field deployment, including dataset augmentation, quantization, and system optimizations. Experimental results show that our DNN can precisely localize a 10cm-size target nano-drone by employing only low-resolution monochrome images, up to ~2m distance. On a disjoint testing dataset our model yields a mean R² score of 0.42 and a root mean square error of 18cm, which results in a mean in-field prediction error of 15cm and in a closed-loop control error of 17cm, over a ~60s-flight test. Ultimately, the proposed system improves the State-of-the-Art by showing long-endurance tracking performance (up to 2min continuous tracking), generalization capabilities being deployed in a never-seen-before environment, and requiring a minimal power consumption of 95mW for an onboard real-time inference-rate of 48Hz.

Cyber Security aboard Micro Aerial Vehicles: An OpenTitan-based Visual Communication Use Case

M. Ciani, S. Bonato, R. Psiakis, A. Garofalo, L. Valente, S. Sugumar, A. Giusti, D. Rossi, and D. Palossi

in Proceedings of the 2023 IEEE International Symposium on Circuits and Systems (ISCAS), pp. 1-5, 2023

@inproceedings{ciani2023cyber,

author={Ciani, Maicol and Bonato, Stefano and Psiakis, Rafail and Garofalo, Angelo and Valente, Luca and Sugumar, Suresh and Giusti, Alessandro and Rossi, Davide and Palossi, Daniele},

booktitle={2023 IEEE International Symposium on Circuits and Systems (ISCAS)},

title={Cyber Security aboard Micro Aerial Vehicles: An OpenTitan-based Visual Communication Use Case},

year={2023},

volume={},

number={},

pages={1-5},

doi={10.1109/ISCAS46773.2023.10181732}

}

Abstract

Autonomous Micro Aerial Vehicles (MAVs), with a form factor of 10cm in diameter, are an emerging technology thanks to the broad applicability enabled by their onboard intelligence. However, these platforms are strongly limited in the onboard power envelope for processing, i.e., less than a few hundred mW, which confines the onboard processors to the class of simple microcontroller units (MCUs). These MCUs lack advanced security features opening the way to a wide range of cyber security vulnerabilities, from the communication between agents of the same fleet to the onboard execution of malicious code. This work presents an open-source System on Chip (SoC) design that integrates a 64 bit Linux capable host processor accelerated by an 8 core 32 bit parallel programmable accelerator. The heterogeneous system architecture is coupled with a security enclave based on an open-source OpenTitan root of trust. To demonstrate our design, we propose a use case where OpenTitan detects a security breach on the SoC aboard the MAV and drives its exclusive GPIOs to start a LED blinking routine. This procedure embodies an unconventional visual communication between two palm-sized MAVs: the receiver MAV classifies the LED state of the sender (on or off) with an onboard convolutional neural network running on the parallel accelerator. Then, it reconstructs a high-level message in 1.3s, 2.3 times faster than current commercial solutions.

Handling Pitch Variations for Visual Perception in MAVs: Synthetic Augmentation and State Fusion

E. Cereda, D. Palossi, and A. Giusti

in Proceedings of the 13th International Micro Air Vehicle Conference, pp. 59–65, 2022

@inproceedings{cereda2022handling,

author = {Elia Cereda and Daniele Palossi and Alessandro Giusti},

editor = {G. de Croon and C. De Wagter},

title = {Handling Pitch Variations for Visual Perception in MAVs: Synthetic Augmentation and State Fusion},

year = {2022},

month = {Sep},

day = {12-16},

booktitle = {13$^{th}$ International Micro Air Vehicle Conference},

address = {Delft, the Netherlands},

pages = {59--65}

}

Abstract

Variations in the pitch of a Micro Aerial Vehicle affect the geometry of the images acquired by its on-board cameras. We propose and evaluate two orthogonal approaches to handle this source of variability, in the context of visual perception using Convolutional Neural Networks. The first is a training data augmentation method that generates synthetic images simulating a different pitch than the one at which the original training image was acquired; the second is a neural network architecture that takes the drone’s estimated pitch as an auxiliary input. Real-robot quantitative experiments tackle the task of visually estimating the pose of a human from a nearby nanoquadrotor; in this context, the two proposed approaches yield significant performance improvements, up to +0.15 in the R² regression score when applied together.

A Deep Learning-based Face Mask Detector for Autonomous Nano-drones

E. AlNuaimi, E. Cereda, R. Psiakis, S. Sugumar, A. Giusti, and D. Palossi

in Proceedings of the AAAI Conference on Artificial Intelligence, vol. 36, no. 11, pp. 12903–12904, 2022

@inproceedings{alnuaimi2022deep,

title={A Deep Learning-Based Face Mask Detector for Autonomous Nano-Drones (Student Abstract)},

author={AlNuaimi, Eiman and Cereda, Elia and Psiakis, Rafail and Sugumar, Suresh and Giusti, Alessandro and Palossi, Daniele},

booktitle={Proceedings of the AAAI Conference on Artificial Intelligence},

volume={36},

number={11},

pages={12903--12904},

year={2022}

}

Abstract

We present a deep neural network (DNN) for visually classifying whether a person is wearing a protective face mask. Our DNN can be deployed on a resource-limited, sub-10-cm nano-drone: this robotic platform is an ideal candidate to fly in human proximity and perform ubiquitous visual perception safely. This paper describes our pipeline: the dataset collection; the selection and training of a full-precision (i.e., float32) DNN; and a quantization phase (i.e., int8) that enables the DNN's deployment on a parallel ultra-low power (PULP) system-on-chip aboard our target nano-drone. Results demonstrate the efficacy of our pipeline with a mean area under the ROC curve score of 0.81, which drops by only ~2% when quantized to 8-bit for deployment.
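
The float32-to-int8 step can be illustrated with a generic symmetric, per-tensor quantization scheme. This is a minimal sketch under stated assumptions: the abstract does not say which quantization scheme or tool was used, so the scaling rule (largest magnitude mapped to 127) and the helper names `quantize_int8` and `dequantize_int8` are illustrative only.

```python
from typing import List, Tuple


def quantize_int8(values: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor quantization of floats to the int8 range."""
    max_abs = max((abs(v) for v in values), default=0.0)
    # Map the largest magnitude to 127; fall back to 1.0 for all-zero input.
    scale = max_abs / 127.0 if max_abs > 0.0 else 1.0
    # Round to the nearest integer code and clamp to [-127, 127].
    quantized = [max(-127, min(127, round(v / scale))) for v in values]
    return quantized, scale


def dequantize_int8(quantized: List[int], scale: float) -> List[float]:
    """Recover approximate float values from int8 codes."""
    return [q * scale for q in quantized]
```

In this scheme each value is recovered to within half a quantization step (`scale / 2`), which gives an intuition for why a well-conditioned network can lose only a small amount of accuracy when moved to 8 bits.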

Improving the Generalization Capability of DNNs for Ultra-low Power Autonomous Nano-UAVs

E. Cereda, M. Ferri, D. Mantegazza, N. Zimmerman, L. M. Gambardella, J. Guzzi, A. Giusti, and D. Palossi

in 2021 17th IEEE International Conference on Distributed Computing in Sensor Systems (DCOSS), pp. 327–334, 2021

@inproceedings{cereda2021improving,

title={Improving the generalization capability of DNNs for ultra-low power autonomous nano-UAVs},

author={Cereda, Elia and Ferri, Marco and Mantegazza, Dario and Zimmerman, Nicky and Gambardella, Luca M and Guzzi, J{\'e}r{\^o}me and Giusti, Alessandro and Palossi, Daniele},

booktitle={2021 17th International Conference on Distributed Computing in Sensor Systems (DCOSS)},

pages={327--334},

year={2021},

organization={IEEE}

}

Abstract

Deep neural networks (DNNs) are becoming the first-class solution for autonomous unmanned aerial vehicle (UAV) applications, especially for tiny, resource-constrained nano-UAVs, with a few tens of grams in weight and sub-ten centimeters in diameter. DNN visual pipelines have been proven capable of delivering high intelligence aboard nano-UAVs, efficiently exploiting novel multi-core microcontroller units. However, one severe limitation of this class of solutions is the generalization challenge, i.e., the visual cues learned on the specific training domain hardly predict with the same accuracy on different ones. Ultimately, it results in very limited applicability of State-of-the-Art (SoA) autonomous navigation DNNs outside controlled environments. In this work, we tackle this problem in the context of the human pose estimation task with a SoA vision-based DNN [1]. We propose a novel methodology that leverages synthetic domain randomization by applying a simple but effective image background replacement technique to augment our training dataset. Our results demonstrate how the augmentation forces the learning process to focus on what matters most: the pose of the human subject. Our approach reduces the DNN’s mean square error (vs. a non-augmented baseline) by up to 40% on a never-seen-before testing environment. Since our methodology tackles the DNN’s training stage, the improved generalization capabilities come at zero cost for the computational/memory burdens aboard the nano-UAV.
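
The background replacement idea can be sketched as a simple mask-based composite. The example below assumes that a binary mask of the human subject is already available for each training image; how such masks are obtained is not stated in the abstract, and the names `replace_background` and `augment` are hypothetical.

```python
import random
from typing import List, Sequence, Tuple

Pixel = Tuple[int, int, int]
Image = List[List[Pixel]]


def replace_background(image: Image, mask: List[List[int]], background: Image) -> Image:
    """Keep pixels where mask == 1 (the subject); take the rest from background."""
    if not (len(image) == len(mask) == len(background)):
        raise ValueError("image, mask, and background must have the same height")
    out: Image = []
    for img_row, mask_row, bg_row in zip(image, mask, background):
        if not (len(img_row) == len(mask_row) == len(bg_row)):
            raise ValueError("image, mask, and background must have the same width")
        out.append([fg if m else bg for fg, m, bg in zip(img_row, mask_row, bg_row)])
    return out


def augment(image: Image, mask: List[List[int]], backgrounds: Sequence[Image],
            rng: random.Random) -> Image:
    """Composite the subject onto a randomly chosen background."""
    return replace_background(image, mask, rng.choice(backgrounds))
```

Because the subject pixels and the pose label are untouched, the same label can be reused for every composite, and the extra work happens only at training time.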

Fully Onboard AI-powered Human-drone Pose Estimation on Ultra-low-power Autonomous Flying Nano-UAVs

D. Palossi, N. Zimmerman, A. Burrello, F. Conti, H. Müller, L. M. Gambardella, L. Benini, A. Giusti, and J. Guzzi

in IEEE Internet of Things Journal, vol. 9, no. 3, pp. 1913–1929, 2021

@article{palossi2021fully,

title={Fully onboard {AI}-powered human-drone pose estimation on ultralow-power autonomous flying nano-{UAVs}},

author={Palossi, Daniele and Zimmerman, Nicky and Burrello, Alessio and Conti, Francesco and M{\"u}ller, Hanna and Gambardella, Luca Maria and Benini, Luca and Giusti, Alessandro and Guzzi, J{\'e}r{\^o}me},

journal={IEEE Internet of Things Journal},

volume={9},

number={3},

pages={1913--1929},

year={2021},

publisher={IEEE}

}

Abstract

Many emerging applications of nano-sized unmanned aerial vehicles (UAVs), with a few cm² form factor, revolve around safely interacting with humans in complex scenarios, for example, monitoring their activities or looking after people needing care. Such sophisticated autonomous functionality must be achieved while dealing with severe constraints in payload, battery, and power budget (~100 mW). In this work, we attack a complex task going from perception to control: to estimate and maintain the nano-UAV’s relative 3-D pose with respect to a person while they freely move in the environment, a task that, to the best of our knowledge, has never previously been targeted with fully onboard computation on a nano-sized UAV. Our approach is centered around a novel vision-based deep neural network (DNN), called Frontnet, designed for deployment on top of a parallel ultra-low power (PULP) processor aboard a nano-UAV. We present a vertically integrated approach starting from the DNN model design, training, and dataset augmentation down to 8-bit quantization and deployment in-field. PULP-Frontnet can operate in real time (up to 135 frame/s), consuming less than 87 mW for processing at peak throughput and down to 0.43 mJ/frame in the most energy-efficient operating point. Field experiments demonstrate a closed-loop top-notch autonomous navigation capability, with a tiny 27-g Crazyflie 2.1 nano-UAV. Compared against an ideal sensing setup, onboard pose inference yields excellent drone behavior in terms of median absolute errors, such as positional (onboard: 41 cm, ideal: 26 cm) and angular (onboard: 3.7°, ideal: 4.1°). We publicly release videos and the source code of our work.
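
The throughput, power, and energy figures above are linked by a simple relation: energy per frame equals power divided by frame rate, and mW divided by frame/s gives mJ/frame. The short sketch below applies it to the peak-throughput numbers from the abstract; the function name is hypothetical. Since the abstract states "less than 87 mW", the result is an upper bound of about 0.64 mJ/frame at peak throughput. The 0.43 mJ/frame figure refers to a different, more energy-efficient operating point whose power and frame rate are not given in the abstract.

```python
def energy_per_frame_mj(power_mw: float, throughput_fps: float) -> float:
    """Energy per inference in mJ: power (mW) divided by throughput (frame/s)."""
    if throughput_fps <= 0:
        raise ValueError("throughput must be positive")
    return power_mw / throughput_fps


# Figures quoted in the abstract for the peak-throughput operating point.
peak_energy_mj = energy_per_frame_mj(power_mw=87.0, throughput_fps=135.0)
print(f"upper bound at peak throughput: {peak_energy_mj:.2f} mJ/frame")
```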